Towards multi-modal causability with Graph Neural Networks enabling information fusion for explainable AI

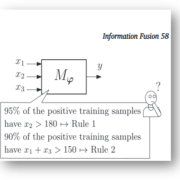

Our paper on Multi-Modal Causability with Graph Neural Networks enabling Information Fusion for explainable AI (Information Fusion, 71, (7), 28-37, doi:10.1016/j.inffus.2021.01.008)

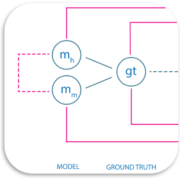

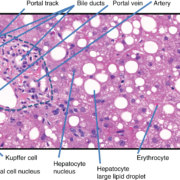

has just been published. Our central hypothesis is that using conceptual knowledge as a guiding model of reality will help to train more explainable, more robust and less biased machine learning models, ideally able to learn from less data. One important aspect in the medical domain is that various modalities contribute to a single result. Our main question is “How can we construct a multi-modal feature representation space (spanning images, text, genomics data) using knowledge bases as an initial connector for the development of novel explanation interface techniques?”. In this paper we argue for using Graph Neural Networks as the method of choice, enabling information fusion for multi-modal causability (causability, not to be confused with causality, is the measurable extent to which an explanation to a human expert achieves a specified level of causal understanding). We hope that this is a useful contribution to the international scientific community.

The Q1 journal Information Fusion is ranked No. 2 out of 138 in the field of Computer Science, Artificial Intelligence, with an SCI Impact Factor of 13.669; see: https://www.sciencedirect.com/journal/information-fusion